In the age of social shopping, we place a tremendous amount of faith in the opinions of our peers, and a tremendous amount of trust in the systems that gather and organize those opinions. We spend most of our time wondering whether the manufacturer’s claims are fake, taking for granted that the data we base our decisions on is accurate. Apparently, that isn’t something we should take for granted.

I am a very social shopper. I love to research my possible purchases, and I tend to weigh user reviews more heavily than editors’ reviews when it comes to judging real-world usage. For any technology-related purchase, my first stop is always CNET. I have trusted their knowledge and expertise for over a decade. Yesterday, while looking into a Black Friday deal on a Samsung SyncMaster S23A350H monitor, I wondered why the editors gave it a full 3 stars while the users gave it a lowly 2 stars.

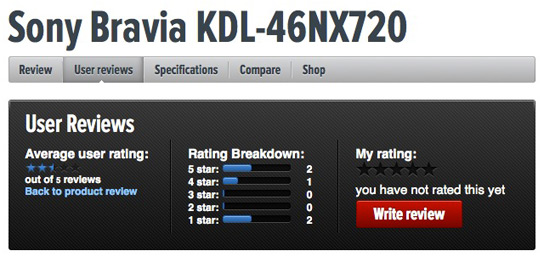

Something struck me as odd as I was looking over the reviews. They didn’t seem that bad, so I did the math. With only 5 reviews, it was easy math, but the result was a big surprise.

Based on the scores CNET lists, this monitor should have had a 2.5-star Average User Rating, not the 2 stars displayed. On a 5-star scale, a half-star deficit is significant: it amounts to 10% of the entire scale.

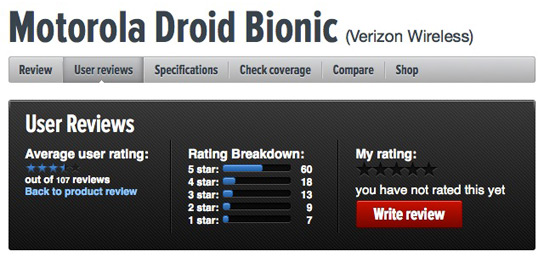
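If you want to repeat this kind of spot-check yourself, the arithmetic is easy to script. What follows is only a minimal sketch: the function names are my own, the scores in the usage example are hypothetical placeholders (not the actual reviews from the CNET page), and it assumes the site is meant to show the plain mean of the user scores rounded to the nearest half star, which may not be how CNET really computes its rating.

```python
import math


def average_rating(scores):
    """Return the plain arithmetic mean of a list of star scores."""
    if not scores:
        raise ValueError("need at least one score")
    return sum(scores) / len(scores)


def to_half_stars(value):
    """Round a rating to the nearest half star (ties round up)."""
    return math.floor(value * 2 + 0.5) / 2


def shortfall(scores, displayed):
    """How many stars the displayed rating falls short of the computed one."""
    return to_half_stars(average_rating(scores)) - displayed


# Hypothetical scores for illustration only; substitute the ones listed on the page.
example_scores = [1, 2, 2, 3, 4]
expected = to_half_stars(average_rating(example_scores))
print(expected)                        # rating the page should show, under the assumption above
print(shortfall(example_scores, 2.0))  # gap versus a displayed rating of 2 stars
```

A positive result from `shortfall` means the page is showing fewer stars than the listed scores support.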

Before jumping to conclusions, I decided to spot-check a few more products. The news isn’t good. The poor Motorola Droid Bionic arguably suffers from an even more egregious shortage:

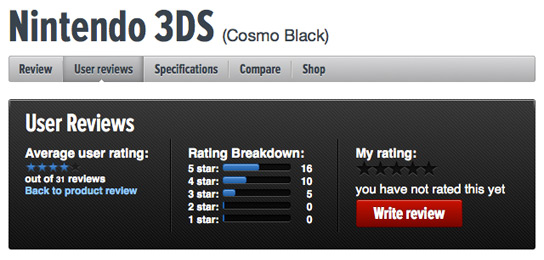

Any way you do the math, the Droid Bionic should be showing a full 4 stars, but the screenshot speaks for itself with 3.5 blue stars. My other two tests had similar shortages.

Every one of the Average User Ratings I checked is mathematically flawed. Sure, you could argue that “if they’re all too low by the same amount, then it’s still an even playing field,” but the simple fact is that the absolute values can have a major impact on buying decisions. Additionally, consumers may be comparing data from various sources in their research. A missing half-star, in the context of the overall user opinions, could be the difference between buying and bailing.

Hopefully CNET will address this issue quickly (and if I hear from them that they have, I will gladly update this post to announce it). For manufacturers, the lesson in all of this is that you have to monitor the feedback and public opinions that are posted for your products, not just for quality, but for quantity and any aggregate scores that are being published about them.